
Machine vision and contrast: how automated vehicles see the roads

3M

Nearly all new vehicles on the road are equipped with some form of advanced driver assistance systems (ADAS), including lane departure warning (LDW) and lane keep systems (LKS). In fact, the U.S. Department of Transportation’s National Highway Traffic Safety Administration (NHTSA) has required ADAS rear visibility technology on all vehicles weighing less than 10,000 lbs. sold in the U.S. since May 2018, and both the European Union and the United States will require all vehicles to have autonomous emergency-braking systems and forward-collision warning systems by 2020.

The advancement of ADAS technologies represents a significant step towards the production of fully connected and automated vehicles (CAVs). And in recent years, a growing number of automotive manufacturers have developed and produced CAVs. In several states and international cities—including California, Michigan, Paris, London and Beijing—autonomous vehicles are already sharing the road with human drivers. And by some estimates, 8 million self-driving vehicles will be sold annually by 2025.

There are numerous anticipated benefits of cars equipped with ADAS and CAVs, including fewer accidents, more free time and improved mobility. But they also present challenges when you’re planning the roadways of the present and the future.

To enhance roadway safety for human drivers, vehicles equipped with ADAS, and the driverless vehicles of the future, it’s important to understand how these vehicles will perceive and interpret their surroundings. Some might assume that the primary navigation method will utilize GPS and High Definition mapping tools. While these provide a powerful combination of data, they can be unreliable in certain conditions and may not deliver the precision or real-time data you need to ensure road safety.

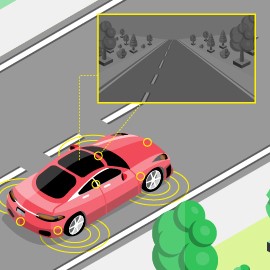

Currently, both ADAS and CAVs rely on machine vision for most driving functions. For machine vision to be safe and effective for these vehicles, your roads require infrastructure like highly detectable pavement markings and signage. Meanwhile, human drivers will continue to benefit from infrastructure that is easy to interpret and can be seen in a range of driving conditions.

What is Machine Vision?

Machine vision for ADAS and autonomous vehicles consists of mounted cameras and image sensors that feed data, in the form of digital images, to the vehicle’s image signal processor (ISP). The ISP then runs complex algorithms on that data and provides input to the vehicle’s main computer. The main computer can then send a warning to the driver or take control of the vehicle, depending on the vehicle’s level of autonomy.

Each automotive OEM uses its own proprietary algorithms for detecting and classifying objects around a vehicle. It might be helpful to think of the vehicle’s ISP as a “black box.” You don’t know exactly how it’s making decisions. But you can understand what types of data input the vehicle needs to remain safe and mobile, and some of the different methods it could use to interpret that information. Then you can design infrastructure that helps provide the best possible inputs so the vehicle can make critical decisions about its surroundings.

4 Ways Machine Vision Algorithms Detect Key Features

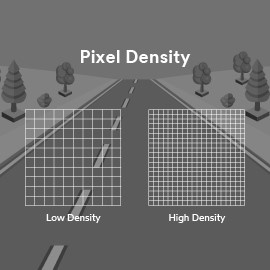

Let’s take a high-level look at how a machine vision algorithm will find critical features like pavement markings or pedestrians. We mentioned earlier that the vehicle’s cameras send digital images to the ISP. Each image is made up of a grid of pixels (you’re likely familiar with the concept of pixels from computer monitors and TV screens). Each pixel has a location (e.g., a spot in the upper right-hand corner of the picture) and a number that represents its intensity. If the pixel is dark, the computer will assign that pixel a value close to zero. If the pixel is light, it will assign a larger value, with a maximum value of 255 for absolute white in a typical 8-bit image. Contrast is achieved when light pixels are close to dark pixels (high numbers close to low numbers).
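To make the pixel description concrete, here is a minimal Python sketch of a grayscale image stored as rows of intensities on the 0 to 255 scale described above. The pixel values are invented for illustration, and the Michelson formula is only one common way to put a number on contrast; nothing here is taken from any manufacturer’s ISP.

```python
# A tiny grayscale image: each row is a list of intensities (0 = black, 255 = white).
# Values are made up: dark asphalt (around 40) crossed by a bright stripe (around 220).
image = [
    [40, 42, 220, 218, 41, 39],
    [38, 41, 222, 219, 40, 42],
    [41, 40, 221, 220, 39, 40],
]


def pixel(image, row, col):
    """Return the intensity stored at a (row, col) location."""
    return image[row][col]


def michelson_contrast(image):
    """Return (max - min) / (max + min), a contrast measure between 0 and 1."""
    values = [v for row in image for v in row]
    lo, hi = min(values), max(values)
    # An all-black image has max + min == 0; treat it as having no contrast.
    return (hi - lo) / (hi + lo) if (hi + lo) else 0.0


print(pixel(image, 0, 2))                    # intensity of one stripe pixel
print(round(michelson_contrast(image), 3))   # closer to 1 means higher contrast
```

A uniformly gray image scores 0 with this measure, while a bright marking on a dark surface scores close to 1.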

For a machine vision algorithm to detect a feature like a pavement marking, it needs to see contrast between the pavement marking’s pixels and the road’s pixels. Here are 4 examples of machine vision schemes commonly used to calculate contrast and detect features of interest like pavement markings.

1. Difference in Light and Dark Pixels. The simplest algorithms calculate the absolute difference between the values of adjacent pixels. The algorithm looks for large differences, which are interpreted to be features of interest such as the edges of pavement markings. The algorithm then makes decisions about road lanes using this information.

2. Edge Detection Scheme. A slightly more complex scheme calculates the rate of change of the pixel values across the image, which can be pictured as the slope of a graph of pixel value against position. The algorithm is looking for a steep slope, indicating a more sharply defined difference between light and dark. Again, this method is used to find edges.

3. Corner Detection Scheme. This scheme uses some of the same calculations as the Edge Detection Scheme. However, instead of simply calculating the pixel value rate of change in one direction, the algorithm simultaneously considers the rate of change over two directions. This helps the computer detect corners in its field of view, rather than edges.

4. Convolutional Neural Network (CNN), a form of machine learning. These are the most complex algorithms used by autonomous vehicles, and the direction many manufacturers are heading. This method uses deep learning to train the vehicle’s computer to detect and classify objects in its field of view, including pavement markings, signs and pedestrians.
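Schemes 1 to 3 can be sketched in a few lines of plain Python. This is an illustration under stated assumptions, not any OEM’s algorithm: the function names and sample images are hypothetical, the gradient uses a simple central difference, and the corner score follows the well-known Harris formulation with an unweighted 3x3 window and k = 0.05. A CNN is not sketched here because its behavior depends on trained weights.

```python
def adjacent_differences(row):
    """Scheme 1: absolute difference between each pixel and its right-hand neighbor."""
    return [abs(b - a) for a, b in zip(row, row[1:])]


def central_gradient(row):
    """Scheme 2: rate of change at each interior pixel (central difference)."""
    return [(row[i + 1] - row[i - 1]) / 2 for i in range(1, len(row) - 1)]


def gradients_2d(image, r, c):
    """Rate of change at (r, c) in the horizontal and vertical directions."""
    gx = (image[r][c + 1] - image[r][c - 1]) / 2
    gy = (image[r + 1][c] - image[r - 1][c]) / 2
    return gx, gy


def harris_response(image, r, c, k=0.05):
    """Scheme 3: Harris-style corner score over a 3x3 window centered on (r, c).

    Positive: intensity changes in two directions (corner).
    Negative: intensity changes in one direction only (edge).
    Zero: flat region. (r, c) must be at least 2 pixels from the border.
    """
    sxx = syy = sxy = 0.0
    for dr in (-1, 0, 1):
        for dc in (-1, 0, 1):
            gx, gy = gradients_2d(image, r + dr, c + dc)
            sxx += gx * gx
            syy += gy * gy
            sxy += gx * gy
    det = sxx * syy - sxy * sxy
    trace = sxx + syy
    return det - k * trace * trace


# Hypothetical 7x7 test images: a bright block with a corner at (3, 3),
# a vertical edge at column 3, and a flat dark surface.
corner_image = [[200 if r >= 3 and c >= 3 else 0 for c in range(7)] for r in range(7)]
edge_image = [[200 if c >= 3 else 0 for c in range(7)] for r in range(7)]
flat_image = [[0] * 7 for _ in range(7)]

row = [40, 42, 220, 218, 41]          # one scan line across a bright stripe
print(adjacent_differences(row))      # [2, 178, 2, 177]
print(central_gradient(row))          # [90.0, 88.0, -89.5]
print(harris_response(corner_image, 3, 3) > 0)   # True
print(harris_response(edge_image, 3, 3) < 0)     # True
```

The large values in the first two outputs line up with the two sides of the stripe, which is where an edge-based scheme would place the marking’s boundaries.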

High Pavement Marking Contrast is Key

In all of these schemes, the vehicles’ ISPs are directly or indirectly performing a function known in the industry as “thresholding”: looking for values that meet a certain threshold, or minimum value. The algorithm will discard any information that doesn’t meet the required threshold.
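As a rough illustration of thresholding, the Python sketch below keeps only the pixel-to-pixel differences that meet a minimum value. The function name, the sample scan lines and the threshold of 50 are all assumptions chosen for the example; real ISPs use proprietary criteria.

```python
def edge_positions(row, minimum):
    """Return the indexes where the jump to the next pixel meets the threshold.

    Jumps smaller than `minimum` are discarded, as in the thresholding step.
    """
    diffs = [abs(b - a) for a, b in zip(row, row[1:])]
    return [i for i, d in enumerate(diffs) if d >= minimum]


# Hypothetical scan lines across a lane marking (0 = black, 255 = white).
high_contrast = [40, 42, 220, 218, 41]     # bright marking on dark asphalt
low_contrast = [150, 152, 180, 179, 151]   # faded marking on light concrete

print(edge_positions(high_contrast, minimum=50))  # [1, 3]
print(edge_positions(low_contrast, minimum=50))   # []
```

With the same threshold, the high-contrast marking yields both of its edges, while the low-contrast marking yields nothing and is effectively invisible to this simple detector.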

When you’re planning your next roadway project, you need to consider this thresholding process. For a driverless vehicle or ADAS to see and understand the pavement markings on your roads, the markings need to provide enough contrast, regardless of the road surface or conditions, to make it past the ISP’s thresholding criteria. By installing pavement markings that are purposefully designed to deliver high contrast, you can provide human drivers, ADAS and autonomous vehicles with high-quality, reliably understood inputs that will help keep your roads safer.

Planning for the Present, Looking to the Future

On every roadway project, it’s your goal to improve the safety and mobility of your roads. As you plan for the present and prepare for the future, you need to consider the needs of human drivers, vehicles with ADAS and self-driving vehicles. The transportation industry has always relied on highly visible and legible infrastructure, including high-contrast retroreflective signage and lines, to help keep human drivers safe. The need for highly detectable markings only increases for vehicles equipped with ADAS and autonomous vehicles.

Pavement markings specifically designed to deliver greater contrast on any road surface (from black asphalt to gray concrete) are easier to see for both people and machines, and will help you enhance road safety. Additionally, standardizing your pavement markings and investing in future infrastructure can save you time and money down the road.

Written by 3M.
