Greg Blackman explores the vision and AI technology transforming our roads, as the transport sector gathers for Intertraffic Amsterdam

Amsterdam is building its smart credentials. The city’s government is working with businesses in the Zuidas commercial district to create a mobility-as-a-service app due to be released later this year. The idea is to encourage commuters to leave their cars behind and use alternative modes of transport, made easier by the app. The ultimate goal is that, by 2025, all of Amsterdam will have reliable, affordable and accessible modes of shared transport.

Mobility-as-a-service (MaaS) is one of the big trends at the Intertraffic Amsterdam show, from 21 to 24 April. The model represents a shift from people relying on their own vehicles to transport being provided as a service. It relies on data, another key theme at Intertraffic, and vision systems are one source of the real-time data it needs. As part of the Intertraffic conference programme, under the theme of big data, representatives from Swisstraffic, Viscando and Flir will give presentations about controlling traffic with vision and AI.

Speaking to Imaging and Machine Vision Europe, Viscando’s CEO, Amritpal Singh, said the company wants to use computer vision and AI to give cities objective, quantitative information. Singh will speak on 22 April at 4pm in Intertraffic’s theatre two.

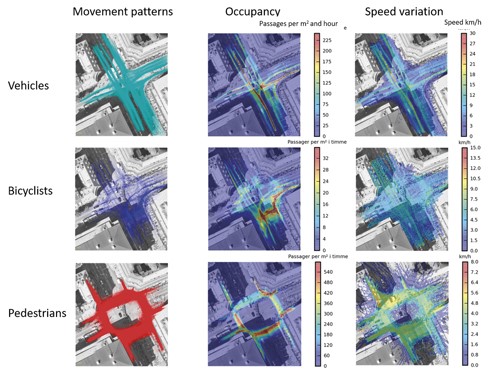

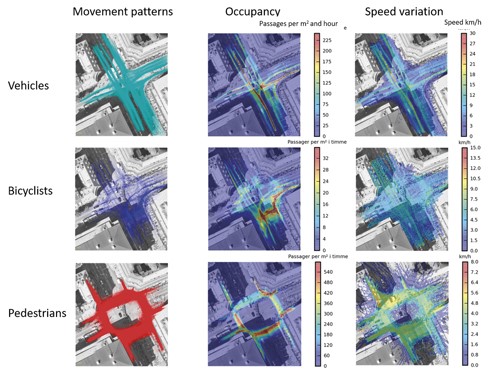

Viscando’s stereovision technology is used not only to monitor traffic but also to control it. The firm’s vision system can track traffic moving through a road intersection or any open area, such as a shared space or the cargo bay of a logistics company.

The system can detect and track all kinds of motorised vehicle, along with bicycles and pedestrians, simultaneously, at a rate of 20Hz (20 updates per second).

‘We get plenty of detailed data on how road users move through an intersection,’ Singh explained. ‘We can analyse the trajectories of road users and look at how people behave, at traffic signals for instance, or analyse conflict risk and measure the gaps people leave between vehicles.’
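Singh mentions measuring the gaps road users leave between vehicles. One common way to express such a gap is the time headway: the interval between a leading and a following vehicle passing the same reference line. The Python sketch below computes it from two trajectories sampled at 20Hz. It assumes positions measured in metres along a single lane, and the names `Sample`, `crossing_time` and `time_gap` are hypothetical, not part of any Viscando interface.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Sample:
    t: float  # timestamp in seconds
    x: float  # hypothetical 1D position along the lane, in metres


def crossing_time(track: List[Sample], line_x: float) -> Optional[float]:
    """Time at which a track first passes line_x, by linear interpolation."""
    for a, b in zip(track, track[1:]):
        if a.x < line_x <= b.x:
            frac = (line_x - a.x) / (b.x - a.x)
            return a.t + frac * (b.t - a.t)
    return None  # the track never crosses the line


def time_gap(leader: List[Sample], follower: List[Sample], line_x: float) -> Optional[float]:
    """Time headway in seconds between a leader and a follower at line_x."""
    t_lead = crossing_time(leader, line_x)
    t_follow = crossing_time(follower, line_x)
    if t_lead is None or t_follow is None:
        return None
    return t_follow - t_lead


# Two vehicles at 10 m/s, sampled every 0.05 s (20Hz), the follower 15 m behind.
leader = [Sample(i * 0.05, 0.5 * i) for i in range(100)]
follower = [Sample(i * 0.05, -15.0 + 0.5 * i) for i in range(100)]
print(time_gap(leader, follower, line_x=20.0))  # about 1.5 s
```

Interpolating between samples matters here: at 20Hz a vehicle at 10 m/s moves half a metre per frame, so snapping to the nearest sample would add up to 0.05 s of error to each crossing time.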

The camera generates a wealth of detail about how road users behave and interact, and how this affects traffic safety. The longer-term goal, according to Singh, is to use machine learning to predict the traffic needs of road intersections and connect intersections with each other. Viscando has two ongoing pilot projects in Sweden investigating this, and started a pilot in Norway this month (February). ‘There are a lot of possibilities to have a complete picture of how the intersection is working and providing data for optimising it,’ Singh said.

The pilot project in Stockholm, called the Multisensor project, is using the system to control traffic. This is a live trial conducted together with the city. The project aims to show that vision technology can replace induction loops for counting the vehicles, pedestrians and cyclists entering and leaving the junction. The Stockholm trial began in August and runs for a year.

Viscando has also been granted government funding for another project in Uppsala, to set up a complete solution for a pilot similar to the one in Stockholm, but at a larger intersection.

Normally between one and four cameras are needed for each intersection, depending on the size of the junction. Viscando can provide multi-camera tracking, in which any number of cameras covering an intersection behave as a single system. An installation could use one sensor with a large field of view, but generally around three sensors with 90-degree fields of view are mounted, which gives more flexibility in coverage and helps avoid occlusion by trees or buildings.

The OTUS3D stereovision camera works in real time, using machine learning algorithms. Viscando’s technology complies with the GDPR because the camera neither records nor transmits video: all trajectory data is extracted onboard the camera in real time. At present, the data is analysed offline.

‘Sweden and Germany, in particular, have a very strict interpretation of the GDPR rules, which our system fits in well with,’ Singh said.

The real-time capability of the system means it is able to control traffic passing through an intersection. ‘In the short term we can substitute five to ten induction loops with a single 3D vision system and, if two or three systems are mounted, we can control the full intersection with non-intrusive, low-maintenance technology,’ Singh added. ‘It also gives the cities much more data about how the intersection is working, the length of queues and duration of waiting time, and being able to include pedestrians and cyclists in the same optimisation scheme.’

Rather than optimising traffic flow for the number of cars, one potential scheme could optimise it for the number of pedestrians or cyclists passing through the intersection, with the priority varying over the course of the day.

The camera can deliver real-world co-ordinates for each object because it’s a 3D system. That makes calibration between systems easier and more or less automatic, according to Singh. This also simplifies the inclusion of the traffic data into a city’s geographic information system.
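Because each sensor reports positions in metres, merging several sensors into one picture, or exporting tracks to a GIS, can reduce to a rigid transform on the ground plane. The sketch below assumes each sensor's heading (yaw) and offset in a shared site frame are already known, for example from a survey. It illustrates the general idea and is not Viscando's calibration procedure; `to_common_frame` and its parameters are hypothetical.

```python
import math
from typing import Tuple


def to_common_frame(x: float, y: float, yaw_deg: float,
                    tx: float, ty: float) -> Tuple[float, float]:
    """Map a ground-plane point from a sensor's frame into a shared site frame.

    The point is rotated by the sensor's yaw (counter-clockwise, in degrees)
    and then translated by the sensor's position (tx, ty) in the site frame.
    """
    a = math.radians(yaw_deg)
    return (x * math.cos(a) - y * math.sin(a) + tx,
            x * math.sin(a) + y * math.cos(a) + ty)


# A road user 1 m in front of a sensor that faces 90 degrees and sits at (10, 5).
print(to_common_frame(1.0, 0.0, yaw_deg=90.0, tx=10.0, ty=5.0))  # approximately (10.0, 6.0)
```

With a 2D camera the same step would first need a per-pixel mapping to the ground, which is the extra calibration a metric 3D sensor avoids.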

Singh explained that the advantage of using 3D vision is that it gives the size of objects, no matter where they are in the field of view. It also means the speed is recorded automatically without the calibration needed when using a 2D camera. The 3D system can also track objects moving directly towards the camera, which would be difficult to do with a 2D camera.
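With real-world 3D positions, speed follows directly from the distance travelled between consecutive measurements. This also holds for an object heading straight towards the camera, where the change shows up mainly in depth. A minimal sketch, assuming positions in metres sampled at 20Hz, the tracking rate Viscando quotes; `speeds_kmh` is a hypothetical helper.

```python
import math
from typing import List, Sequence


def speeds_kmh(positions: Sequence[Sequence[float]], rate_hz: float = 20.0) -> List[float]:
    """Speed between consecutive 3D positions (x, y, z in metres), in km/h."""
    dt = 1.0 / rate_hz  # seconds between samples
    return [math.dist(p, q) / dt * 3.6 for p, q in zip(positions, positions[1:])]


# An object approaching the camera along its optical (z) axis by 0.5 m per frame.
track = [(0.0, 0.0, 30.0), (0.0, 0.0, 29.5), (0.0, 0.0, 29.0)]
print(speeds_kmh(track))  # two values of roughly 36 km/h
```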

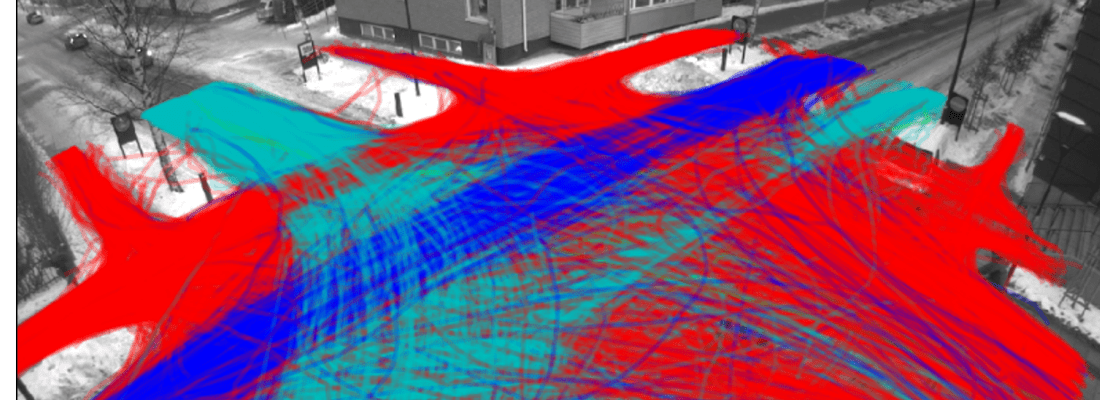

Visualisation of road user movements in one of Stockholm’s largest intersections. Several systems work in parallel, and road users are tracked through multiple fields of view. Three per cent of motorised vehicles run the red light; 20 per cent of cyclists do the same. Credit: Viscando

In addition, 3D vision lets the classification algorithm take an object’s geometry into account without depending on stable illumination, because the 3D camera is robust to changes in lighting.

‘Real-time processing is important, so we combine both shallow and deep learning in order to get the performance, both in terms of accuracy and the real-time nature of the system, without having very expensive hardware,’ Singh explained. The system runs on a Linux computer together with a GPU.

The raw data output from the camera is tracking data at 20Hz, which contains information about the type of road user, the position, the time, and additional metadata.
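Those fields map naturally onto a simple record type. The sketch below is one hypothetical way to hold and summarise that 20Hz stream. The field names, the `track_id` that links points belonging to the same road user, and the `RoadUser` categories are assumptions for illustration, not Viscando's actual output format. Counting distinct road users per type is the kind of figure a scheme that prioritises pedestrians or cyclists would need.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, Iterable


class RoadUser(Enum):
    CAR = "car"
    TRUCK = "truck"
    BICYCLE = "bicycle"
    PEDESTRIAN = "pedestrian"


@dataclass
class TrackPoint:
    track_id: int        # hypothetical ID linking all points of one road user
    user_type: RoadUser  # type of road user
    t: float             # timestamp, seconds
    x: float             # real-world position, metres
    y: float
    meta: dict = field(default_factory=dict)  # stand-in for "additional metadata"


def count_road_users(points: Iterable[TrackPoint]) -> Dict[RoadUser, int]:
    """Count distinct road users of each type in a batch of track points."""
    ids = defaultdict(set)
    for p in points:
        ids[p.user_type].add(p.track_id)
    return {user_type: len(tracks) for user_type, tracks in ids.items()}


# Three 20Hz frames containing two cyclists and one car.
frames = [
    TrackPoint(tid, kind, i * 0.05, float(i), float(tid))
    for i in range(3)
    for tid, kind in [(1, RoadUser.BICYCLE), (2, RoadUser.BICYCLE), (3, RoadUser.CAR)]
]
print(count_road_users(frames))
```

Counting by track ID rather than by point avoids counting the same cyclist 20 times for every second it spends in view.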

At Intertraffic, Viscando will show its traffic monitoring capability, as well as how the cameras can be used for real-time control based on vision data.

Swisstraffic will also present a new vision system with built-in AI at Intertraffic. Similar to Viscando’s camera, the Swiss AI sensor can evaluate images in real time and provide vehicle, bicycle and pedestrian tracking onboard the camera. It is also GDPR-compliant, as no images are stored and no video is streamed. The AI software achieves 95 per cent accuracy.

The AI camera has been installed on roadways in a trial project in Bern, Switzerland, conducted with Swisstraffic’s partner Elektron, which makes smart lighting systems. The camera was used, among other things, to control streetlamps depending on the volume of traffic – when there’s no traffic on the road, streetlamps can be switched off to save energy and reduce light pollution.
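The streetlamp use case amounts to a presence-based switching rule. A minimal sketch, assuming the camera reports a count of detected road users at each update. The hold time, which keeps the lights on for a while after the last detection to avoid rapid on/off flicker, is a hypothetical parameter, not a documented Swisstraffic or Elektron setting.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class LampController:
    hold_s: float = 60.0               # hypothetical: stay on this long after the last detection
    last_seen: Optional[float] = None  # time of the most recent detection, seconds

    def update(self, t: float, detections: int) -> bool:
        """Record the detections at time t and return True if the lamp should be on."""
        if detections > 0:
            self.last_seen = t
        return self.last_seen is not None and t - self.last_seen <= self.hold_s


lamp = LampController(hold_s=60.0)
for t, n in [(0, 0), (10, 1), (50, 0), (71, 0)]:
    print(t, lamp.update(t, n))
```

In this run the lamp stays off until the first detection at 10 s, remains on at 50 s, and switches off once more than 60 s have passed without a road user.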

‘Machine learning offers us a huge opportunity for traffic management,’ commented Maria Riniker, chief communication officer at Swisstraffic. ‘Adding these sensors could make road junctions safer. The device can detect near-misses between road users, for example; these kinds of statistic are not normally recorded.’

Swisstraffic first showed the AI system at the Smart City Expo World Congress in Barcelona last year, and will show it again at Intertraffic Amsterdam in April. Swisstraffic’s Alain Bützberger will present the firm’s technology at Intertraffic on 22 April at 2pm in summit theatre two.

Vitronic is also building AI into its automated toll collection systems, which will be presented at Intertraffic Amsterdam. By using a convolutional neural network alongside classic image processing, Vitronic engineers were able to improve recognition rates for reading number plates, as well as classifying vehicles, according to René Pohl, product manager for tolling at Vitronic. He said it is particularly important for customers that every vehicle is detected and recognised; otherwise they will lose revenue.

Vitronic is also working with the University of Applied Sciences in Darmstadt, Germany, where researchers are using data from existing and new traffic enforcement and management infrastructure, combined with environmental data, either as an empirical database for static traffic models, or as input data for dynamic traffic models.

Autonomous driving

The tracking data Viscando generates is also valuable for the automotive industry, both for traffic safety and for autonomous vehicle development. ‘We are lucky enough to be situated in Gothenburg, where Volvo Cars and Volvo Trucks have their headquarters, along with Volvo’s suppliers Veoneer, Zenuity and Autoliv,’ said Singh. Those suppliers all develop technology for ADAS and autonomous driving.

Viscando has a good collaboration with Volvo, Veoneer and Zenuity, as well as Ericsson. ‘These companies use our data to understand how road users behave and interact, so they can consider how autonomous vehicles should be developed and what kind of situations they will end up in,’ explained Singh.

He went on to say that an autonomous vehicle is a safety-critical system, and because its operation is based on machine learning, there is going to be some kind of regression involved. ‘All machine learning methods tend to concentrate on main features and remove any outliers,’ he said. ‘But for safety-critical systems, you can’t really remove the outliers because, in most cases, those are very important.’

Viscando collects data that identifies the type of outliers that road users might encounter in different situations. Automotive firms can then take that into account when simulating and framing their algorithms for autonomous driving. The data means that driving simulations can be based on real-world data for 95 per cent of the movement of vehicles, with only the last 5 per cent extrapolated, according to Singh.

Viscando has a three-year project with Volvo Cars, Veoneer, Autoliv and Chalmers University of Technology, where Viscando cameras will be collecting data for some parts of the project.

Viscando will expand significantly this year, Singh said. It will offer a real-time traffic control system for small intersections by the middle of 2020, and can already deliver a system for controlling cycle paths. Viscando is also working on making some of the analysis functionality that currently runs offline available online, in real time.

This article was provided by Imaging and Machine Vision Europe. For more content related to intelligent vision technology, visit www.imveurope.com